Art Making Machines

By Ruby Thelot

I. From Production to Reproduction

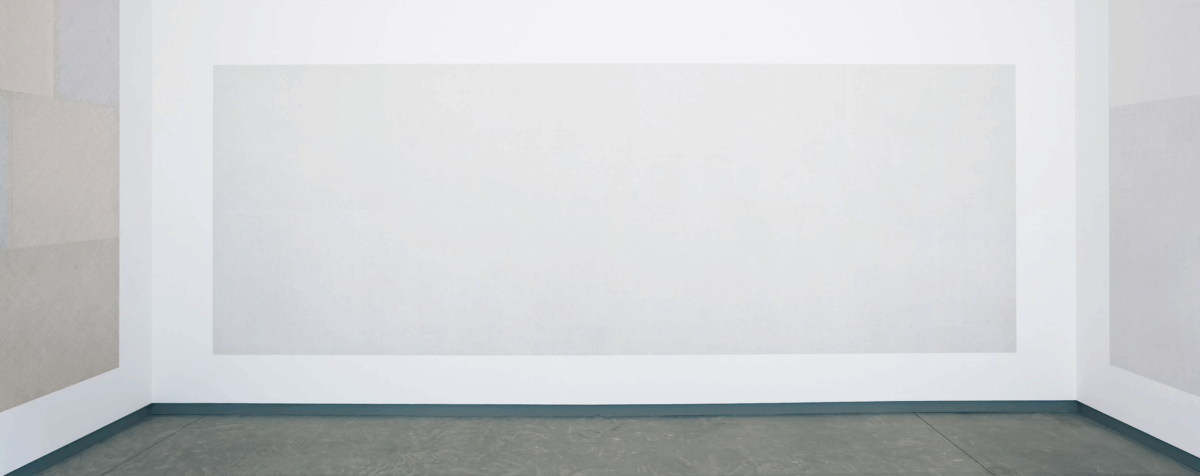

“A wall divided horizontally and vertically into four equal parts. Within each part, three of the four kinds of lines are superimposed.”

These are the instructions LeWitt wrote for Wall Drawing 11, executed by Jerry Orter, Adrian Piper, and LeWitt himself at Paula Cooper Gallery in New York in 1969. A clear list of steps, prescriptive and executable. Drawing distilled into idea. Idea existing as a set of rules. Pure concept. The role of the pre-set rules is to “[avoid] subjectivity,” LeWitt explains in his 1967 Artforum essay, “Paragraphs on Conceptual Art.” He adds, “In conceptual art the idea or concept is the most important aspect of the work. When an artist uses a conceptual form of art, it means that all of the planning and decisions are made beforehand and the execution is a perfunctory affair. The idea becomes a machine that makes the art.”

Notably, though the idea makes the art, the hand still produces it.

In “Postmodernism, or, The Cultural Logic of Late Capitalism,” the literary critic and philosopher Fredric Jameson traces how late capitalism transitions from an economy of production, where one makes objects, artworks, and commodities and the output is a product, to an economy of reproduction, where signs, images, and ideas are the ever-circulating outputs. Rules-based algorithmic art exists within this postmodernist transition, where the artist produces the idea and the machine becomes the reproducer. Although the term “machine” is used abstractly here, it represents the externalization of the production process to an entity exogenous to the mind that generated the idea. At first, that entity is the hand acting mechanically (in LeWitt’s case, often with guides around his pencils), but soon it becomes the computer. This secondary transition is best exemplified in the work of Hungarian artist Vera Molnár.

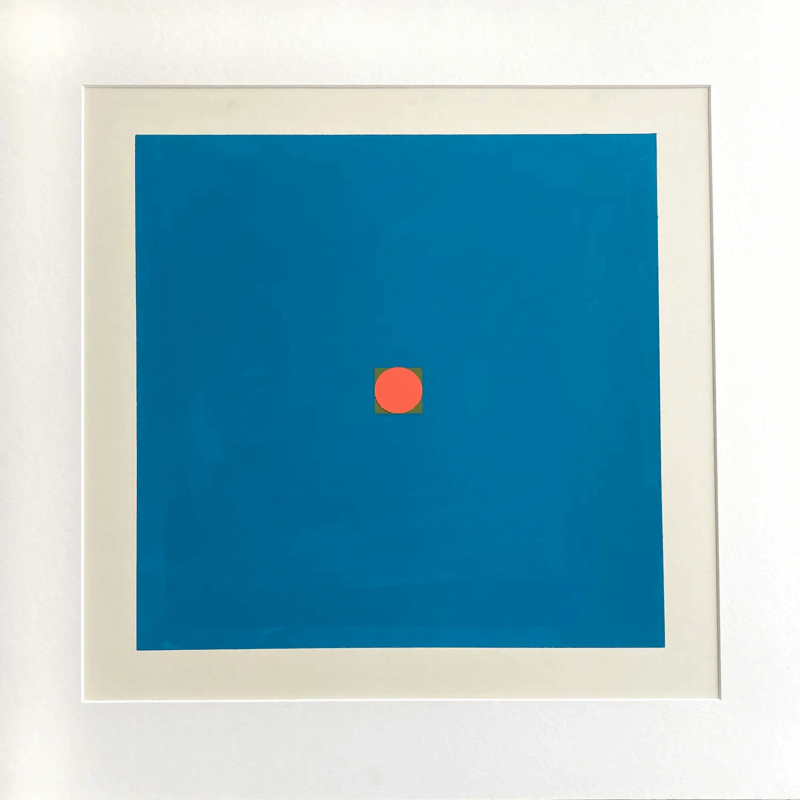

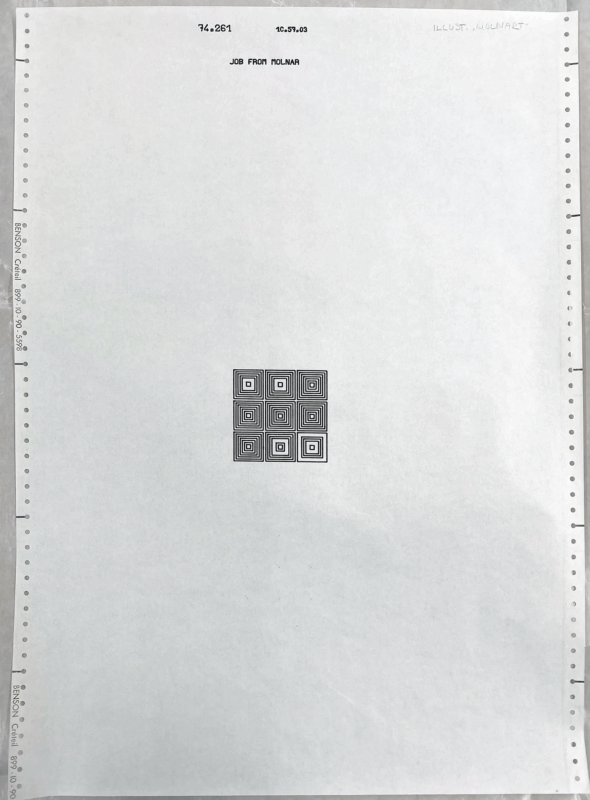

In 1959, Molnár began working with an “imaginary machine” (machine imaginaire). This abstract construction was fed simple algorithms that directed the movement and positions of shapes on the page. She then executed the algorithms by hand. We can imagine that the instruction for Icônes au Carré Rond (1965) resembled the ones from LeWitt’s Wall Drawings in form: “A square canvas, blue, in the middle, a smaller square, green, inside that square, a circle, orange.” Molnár would have to wait almost a decade to get access to a computer. Through her friendship with composer Pierre Barbaud, who introduced her to the French company Bull, she got to work with her first mainframe computer in 1968. During the 1970s, Molnár used an IBM mainframe and a plotter with which she produced a series in honor of her friend. Hommage à Barbaud (otherwise known as (Des)Ordres) is a group of works experimenting with compositions of concentric squares that follow similar algorithms to the ones she fed her imaginary machine.

Notably, though the idea still makes the art, now the computer produces it.

Though LeWitt claimed that “Conceptual art doesn’t have much to do with mathematics,” he seems to overlook that the rules he set out, his so-called concept, are perfectly legible to machines: his works have a mathematical bent, and computers follow similar rule sets in their programs. A. Michael Noll, a Bell Labs engineer and one of the first computer artists, wrote in his essay “Computers and the Visual Arts” that “The computer is extremely adept at constructing purely mathematical pictures.” When works are mathematically described, they become legible to computers: reproducible, executable. Consequently, they leave production entirely. The artist now prescribes what will happen. These computer-legible prescriptions afford reproducibility to the works.
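To make that legibility concrete, here is a minimal sketch of how the Wall Drawing 11 instruction might read as a program. It is an illustration, not LeWitt's method: the labels for the four kinds of lines and the order in which combinations are assigned to quadrants are assumptions, since the instruction itself specifies neither.

```python
from itertools import combinations

# Labels for the four kinds of lines (an illustrative assumption).
LINE_KINDS = ("vertical", "horizontal", "diagonal_right", "diagonal_left")


def wall_drawing_plan(kinds=LINE_KINDS):
    """Assign one three-line combination to each quarter of the wall."""
    quadrants = ("top_left", "top_right", "bottom_left", "bottom_right")
    # Choosing 3 kinds out of 4 yields exactly 4 combinations, one per quadrant.
    triples = list(combinations(kinds, 3))
    # The quadrant order is a hypothetical choice; the instruction leaves it open.
    return dict(zip(quadrants, triples))


plan = wall_drawing_plan()
for quadrant, lines in plan.items():
    print(f"{quadrant}: {' + '.join(lines)}")
```

There are exactly as many three-line combinations as there are quadrants, which is perhaps why the rule reads so cleanly to a machine.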

Reproduction splits into two main techniques: replication and execution.

Replication belongs to the world of mechanical duplication with the Xerox machine as its emblem. In replication, reproduction occurs through direct copying of a fixed original. The process is linear, automatic, and indifferent to content. Each copy carries the promise of sameness, free of degradation.

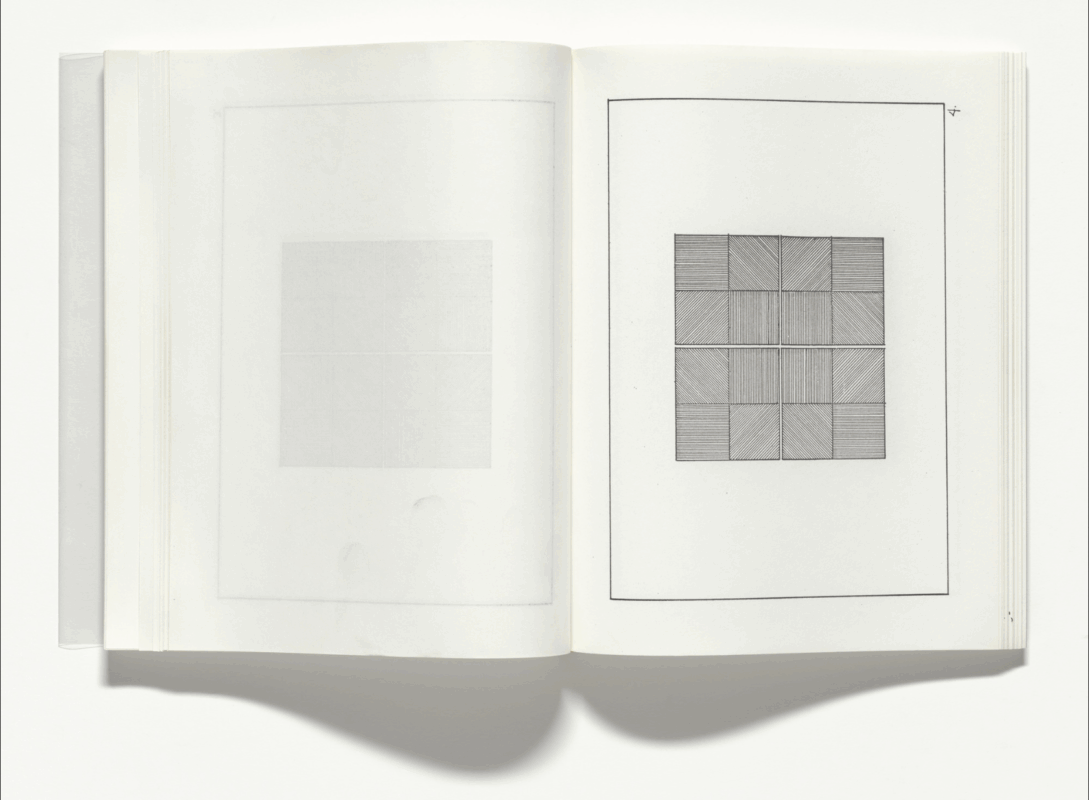

In 1968, The Xerox Book exemplified this paradigm at the intersection of art and reproduction. Curated by Seth Siegelaub, the project involved artists such as LeWitt, Carl Andre, and Robert Morris, each contributing works specifically designed for mechanical reproduction via the Xerox copying machine. LeWitt’s contribution consisted of drawn variations generated through simple, prescribed instructions. Here, the Xerox machine literalizes LeWitt’s own conceptual premise: “the idea becomes a machine that makes the art.”

Execution, by contrast, introduces reproduction as procedural iteration. Rather than copying a finished artifact, execution reproduces through the re-performance of instructions. This is the domain of rule-based algorithmic art, in which a set of instructions — not a physical object — serves as the original. Each instantiation is technically unique but structurally identical, reflecting strict adherence to the artist’s designed logic. Vera Molnár’s Interruptions (1968), for example, uses computer algorithms to systematically generate geometric variations within tightly bounded parameters: execution becomes an act of combinatorial repetition, entirely controlled but capable of producing internal difference.

Thus, while replication (the Xerox) seeks identicality through mechanical copying, execution (algorithmic systems) permits controlled difference through rule-based procedures. In other words, execution permits the inclusion of variability and randomness.
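The distinction can be sketched in a few lines of code. In this hypothetical example, replication duplicates a finished result, while execution re-performs the same rule on a different wall. The function name and the wall dimensions are assumptions made for illustration only.

```python
import copy


def execute(width, height):
    """Divide a wall into four equal parts; return each part as (x, y, w, h)."""
    half_w, half_h = width / 2, height / 2
    return [(x, y, half_w, half_h) for y in (0, half_h) for x in (0, half_w)]


original = execute(400, 300)

# Replication: duplicate the finished artifact, as a photocopier would.
replica = copy.deepcopy(original)

# Execution: re-perform the instructions on a wall of another size.
reinstalled = execute(600, 240)

print(replica == original)                  # identical copy
print(reinstalled == original)              # different instance
print(len(reinstalled) == len(original))    # same structure
```

The replica is indistinguishable from its source. The re-execution shares only the rule, so its coordinates differ while its four-part structure stays the same.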

II. From Reproduction to Variation

As rule-based execution became established, artists began to explore its latent capacity for variation. The system still obeys the author’s rule set, but those rules can now contain parameters for variation: randomized inputs and probabilistic deviations, stochastic processes that generate difference within predefined bounds.

This motion represents a conceptual softening of the algorithmic: the system no longer merely re-performs fixed instructions but becomes an instrument through which the artist courts chance. Variability becomes desirable not as error, but as an aesthetic strategy or a way of introducing dynamism while retaining authorial oversight.

A good example here is Casey REAS’s Century (2023). The series of moving works, inspired by paintings and drawings from the 20th century, operates as framed variations on a theme. Though each work is different, its execution is circumscribed by an algorithm, making each unique yet related. The works, as the viewer can tell, follow a set of clear-cut rules but are still imbued with the magic of algorithmic variability executed by REAS’s own machine.

As discussed above, Vera Molnár described her work as an “imaginary machine,” allowing for small, incremental modifications within a tightly framed logic: a square may be deformed slightly, a line can be displaced by calculated randomness—at first with dice and later with a digital random number generator. Here, the artist remains the designer of the rule-space, but randomness enters as a partner within a sandbox of known outcomes. This phase marks the threshold where algorithmic art begins to open itself to processes it does not entirely contain. Notably, now the idea and randomness make the art and the computer executes it.
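A sandbox of known outcomes can be sketched as a single bounded parameter. The example below is a loose, assumed reading of the concentric-square idea, not a reconstruction of Molnár's own programs. Every name and value in it (`max_jitter`, `step`, the seed) is hypothetical.

```python
import random


def concentric_squares(n=5, step=10.0, max_jitter=2.0, seed=None):
    """Return n concentric squares as lists of four (x, y) corners.

    Each corner is displaced by at most max_jitter along each axis,
    so randomness never leaves the bounds the artist has set.
    """
    rng = random.Random(seed)  # a seeded generator makes a run repeatable
    squares = []
    for k in range(1, n + 1):
        half = k * step
        ideal = [(-half, -half), (half, -half), (half, half), (-half, half)]
        squares.append([
            (x + rng.uniform(-max_jitter, max_jitter),
             y + rng.uniform(-max_jitter, max_jitter))
            for x, y in ideal
        ])
    return squares


ordered = concentric_squares(max_jitter=0.0)                 # pure order
disordered = concentric_squares(max_jitter=2.0, seed=1968)   # bounded disorder
```

With `max_jitter` at zero the squares are perfect. Raising it admits chance, but only up to the stated limit, and reusing the same seed reproduces the same drawing.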

III. From Variation to Generation

From algorithmic generative variation to generative artificial intelligence, we witness a transformative leap. When multiple layers of variability intertwine, the process transcends mere execution and enters the realm of creation. At its core, generation might be reducible to mathematics: vectors, gradient descent, and optimization algorithms. Contemporary generation is performed by machine learning, which is, in essence, the study of statistical algorithms that can learn from training data and, thereafter, generalize their learned rules onto new datasets. Importantly, in the case of neural networks, one of the more popular architectures at the moment, the algorithms attempt to apply their derived rules to new data and learn from their errors, or “loss.” They improve this metric over time by adjusting their weights across many hidden layers through a process called backpropagation.
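The loop of guessing, measuring loss, and adjusting weights can be shown at toy scale. The sketch below fits a single line with two weights by gradient descent. It is deliberately not a neural network: a real network applies the same kind of update to far more weights, with backpropagation supplying the gradients. The data, learning rate, and epoch count are arbitrary assumptions.

```python
def train(data, lr=0.05, epochs=1000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    losses = []
    for _ in range(epochs):
        grad_w = grad_b = loss = 0.0
        for x, y in data:
            error = (w * x + b) - y        # prediction minus target
            loss += error ** 2 / n         # mean squared error
            grad_w += 2 * error * x / n    # derivative of loss w.r.t. w
            grad_b += 2 * error / n        # derivative of loss w.r.t. b
        # Step each weight against its gradient to reduce the loss.
        w -= lr * grad_w
        b -= lr * grad_b
        losses.append(loss)
    return w, b, losses


# Toy data drawn from the line y = 2x + 1 (an illustrative assumption).
data = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0, 4.0)]
w, b, losses = train(data)
print(f"w = {w:.3f}, b = {b:.3f}, final loss = {losses[-1]:.6f}")
```

Nothing in the code states the rule y = 2x + 1. The weights drift toward it because each step reduces the measured error.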

Some generative art today involves these neural networks, which are by definition self-learning and self-modifying. These systems generate work not from a fixed logic defined by the artist, but from probabilistic inference, often producing outputs that are unpredictable, even emergent.

The work of Refik Anadol, specifically Unsupervised – Machine Hallucinations (2021), is a demonstration of the breadth of neural network-powered generative systems. Anadol provides the neural network with a dataset (in this case, 138,000 works in the collection of the Museum of Modern Art) and lets it draw connections that are then expressed as hypnotic, colorful murmurations on a giant screen. The moving shapes are not set by the artist but are instead the expression of the vectorial linkages between the metadata. We don’t know why they look the way they do; they just do. The system draws the connections; we observe its visualizations.

The emergent generative system evolves into something more profound: a hyperobject, an abstract monolith that gazes back at us by reflecting patterns found in its training data. Vera Molnár’s notion of the “imaginary machine” evolves here into an “imaginary monolith,” an entity that embodies the numinous, offering us a profound encounter with an Other that is both artificial and inscrutable because of its size (thousands of layers cannot be read by one person). In this opacity lies a new form of meaning. The machine, whether real or imagined, becomes an object of contemplation that transcends its mathematical origins. Working with algorithms becomes a waltz with a vast and hazy randomness that invites us into a dialogue with the unknown.

Generative art is resonant now because it is an expression of this unknown. We’re enraptured by works that feel foreign, otherworldly, not unlike the mystic painter or the sacred iconographer. Interacting with generative art becomes a brush with the undecipherable. Where we once authored rules for the machine, we now find ourselves deciphering the rules embedded within it. The locus of meaning has shifted: from prescription to discovery.

Notably, we make the idea machine, which generates the art, and the computer produces it.

The artist using algorithmic generation is taking slow steps, away from the machine, like a careful yet anxious bomb-maker. The vectors multiply by the thousands and the layers teem with backpropagation. Ultimately, we are looking at the transmutation of numbers into works of art, but as artist Vasily Kandinsky in his Concerning the Spiritual in Art (1912) reminds us, “The final abstract expression of every art is number.”

Algorithm: A step-by-step set of instructions or rules for solving a problem or completing a task. A recipe is an algorithm, as is a computer program.

Instantiation: In object-oriented programming, the creation of an instance, a specific object built from a template called a class. We can think of instantiation like the manufacturing of a car, where each build depends on the setting of variables such as make, model, and color.

Combinatorial: Relating to the combination of elements from a numerical set, arranged in no particular order.

Stochastic: Involving chance, probability, or randomness.

Generative AI: A subfield of artificial intelligence that uses generative models trained on large data sets to create new content (text, images, music, or videos) by mimicking the underlying structures, patterns, and styles of what it was trained on. Popular commercial generative AI tools like ChatGPT, DALL-E, and Midjourney use a chatbot feature where users can prompt the AI system using natural language rather than code. These large-scale systems are trained using trillions of data points collected from the internet and are controversial for the way they appropriate (and imitate) existing intellectual property and for their energy usage and environmental impact.

Gradient descent: In machine learning, an optimization algorithm that minimizes the error between predicted and actual results by repeatedly adjusting a model’s parameters in the direction that reduces that error.

Machine learning: A branch of artificial intelligence in which computers learn to make predictions or decisions by finding patterns in data, rather than being explicitly programmed for each task. The system improves its performance automatically through experience or the input of new data, getting better at tasks like recognizing images, translating languages, or making recommendations.

Neural network: A type of learning algorithm associated with machine learning and artificial intelligence that is loosely modeled on how the human brain works. It uses interconnected nodes (like brain neurons) to process information, recognize patterns, and learn from data. By adjusting connections between nodes, it can improve at tasks like image recognition or language processing.

Backpropagation: Short for “backward propagation of error.” In machine learning, a computation method used to train neural networks. Backpropagation uses the chain rule (of multivariable calculus) to calculate how changes to any of the weights or biases of a neural network will affect the accuracy of model predictions.

Emergence: When simple elements interact to create complex behaviors or patterns that could not be predicted from the individual components alone. It’s how ant colonies organize without a leader, how consciousness arises from neurons, or how water molecules create waves: something new emerges from simpler parts interacting.

Generative art: Art created using autonomous systems like computer algorithms, AI models, or rule-based processes where the artist sets up initial parameters but then allows the system to independently produce or contribute to the final artwork. The artist designs the process rather than directly creating every element, embracing randomness, complexity, and emergence to produce results that often could not be fully predicted beforehand.