Towards Ekphrastic Writing for Digital Arts

Regina Harsanyi

When art historians describe visual works, they often use ekphrasis. Ekphrasis can be defined as detail-driven language meant to transport readers or listeners, with great vividness, to works of art they have not otherwise witnessed. In service of ekphrasis, art history has developed a sophisticated vocabulary for discussing traditional materials and processes. Consider how an art historian might describe Ad Reinhardt’s painting Number 1 (1951), in the Toledo Museum of Art’s collection. The following example approximates that tradition. It is intentionally ornate, overly specific, and just self-serious enough to signal an International Art English-esque elitism:

The composition features discrete rectangular forms in blues and purples on black, establishing modular relationships across Belgian linen support. Reinhardt attenuated oil pigments with turpentine, extracting binding medium through controlled siphoning to achieve matte surface quality with velvety tactility that alters paint film behavior. Each chromatic passage maintains spectral identity while engaging geometric syntax. Blues range from saturated to atmospheric tones; purples span burgundy to violet-grays. The tessellated field creates low-reflectance surfaces eliminating specular reflection. This manipulation of rheological properties and optical characteristics generates effects through calculated subversion of conventional material substrate, transforming pictorial representation into phenomenological inquiry.

This method of description demonstrates an art historian’s command of material vocabulary: an understanding of how specific pigments, canvas types, and application techniques create aesthetic effects or reveal something about a historical moment. The discipline can discuss not just what is seen, but how the artist’s technical choices, from support selection to oil extraction, generate specific insight into a work of art.

Yet when confronting art with digital components, art historians and critics retreat into vague terms like “virtual” or “immaterial,” as if these works somehow float beyond physical reality. This vocabulary gap reveals how underdeveloped the language of art history and criticism remains for digital materials, even though art with digital components is often no different in its fundamental physicality.

Art with digital components depends on equally precise physical processes, down to the electrons stored in microscopic capacitors, transistors switching at nanosecond intervals, and organic molecules in OLED (Organic Light-Emitting Diode) displays emitting light at specific wavelengths. This essay argues for developing a technical understanding of these very physical properties of the works and how they’re displayed, leading towards ekphrastic competence that treats bits and pixels with the same material specificity demonstrated above, bringing the same technical precision to digital media that art historians routinely apply to traditional materials.

Digital Ekphrasis

Software for digital art and design often still relies on skeuomorphism, offering “brushes,” “pencils,” and digital “canvas.” These metaphors emerged from necessity; early tools needed familiar references for users transitioning from traditional media. Yet this vocabulary obscures the actual processes: mathematical functions executing across parallel processing cores, textures sampled from algorithmic noise patterns, and transparency calculated in specialized color spaces. Adobe’s “brush hardness” setting controls how opacity falls off toward the edge of the brush tip, pixel by pixel, not bristles and paint flow. Code is matter, whether raw or veiled through commodified software.

These seven short examples of technical, ekphrastic writing consider works from Infinite Images: The Art of Algorithms, so the focus of this essay is limited to generative, algorithmic works. Even so, they demonstrate how descriptive texts could read if art historians and critics developed a new taxonomy and understanding of digital materiality. The following examples will likely feel dense or unfamiliar to many readers; this unfamiliarity is precisely the point. Just as the Reinhardt description assumes fluency with traditional material processes, these examples assume fluency with computational processes that art criticism has yet to develop. A working taxonomy might include: processor protocols (how code runs), buffer structures (where images assemble), rendering pipelines (how pixels form), and signal domains (how data flows). A materially grounded description need not eclipse questions of virtuality or simulation; it can coexist with readings of disembodiment by showing how those very effects are actually produced in silicon and signal.

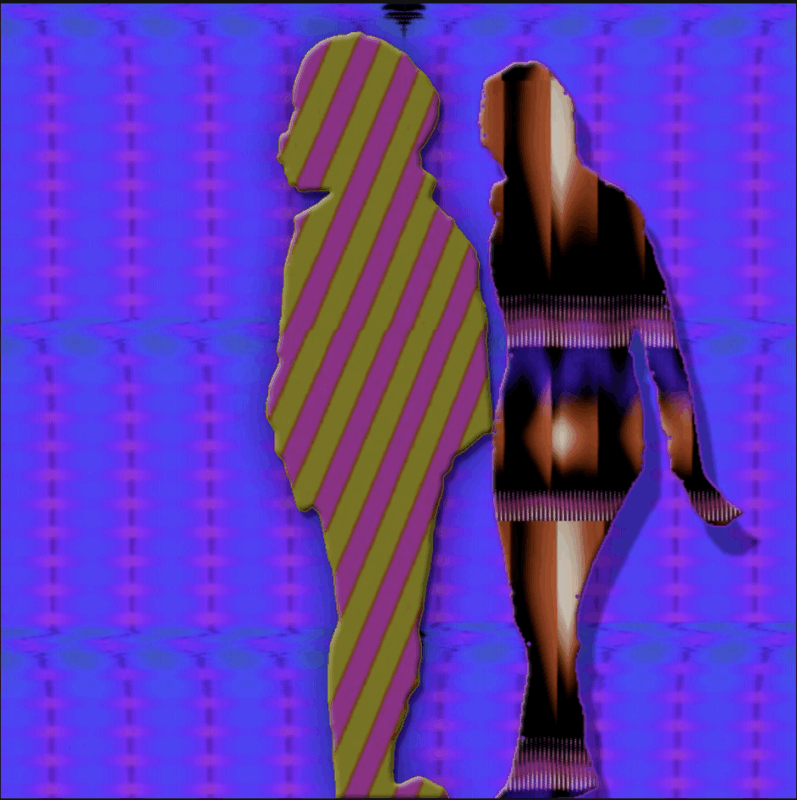

Operator, Human Unreadable

Operator (Ania Catherine and Dejha Ti) dissolves the boundary between flesh and data in Human Unreadable. Dance becomes computational material through systematic transcoding that preserves the essence of movement while hiding its human origin. Motion capture suits tracked Catherine’s gestures as streams of spatial coordinates. Optical sensors measured each joint position at 120 frames per second, and velocity vectors, calculated from changes in position over time, were stored as floating-point numbers occupying specific memory addresses, like digital fossils of corporeal motion.

Catherine’s arm extending through space generated cascades of numerical data that Operator’s custom downsampling tools converted through precise protocols, each layer stripping away human recognizability while retaining kinetic relationships. Their “hash per bone calculation” method processes individual joint data through mathematical functions, creating discrete computational signatures for each body part: shoulder, elbow, and wrist become abstract coordinates that preserve the gesture’s temporal signature but render it unreadable to human perception.
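Operator’s actual hash-per-bone code is not described here, so the following is only a minimal Python sketch of the general idea, assuming a hypothetical scheme in which a SHA-256 hash of each bone’s name fixes a per-bone offset and scale. Applying the same transform at every frame keeps the timing of a gesture while displacing its coordinates. The names bone_signature and transcode, and the sample values, are illustrative.

```python
import hashlib

def bone_signature(bone_name):
    """Hypothetical: derive a stable offset and scale for one bone from a hash."""
    digest = hashlib.sha256(bone_name.encode("utf-8")).digest()
    offset = digest[0] / 255.0         # first byte mapped to 0.0..1.0
    scale = 0.5 + digest[1] / 255.0    # second byte mapped to 0.5..1.5
    return offset, scale

def transcode(bone_name, trajectory):
    """Apply one bone's fixed offset and scale to every frame of its trajectory.

    Because the same positive scale is used at every frame, the ordering of
    the motion (rising, pausing, falling) survives, but the absolute values
    no longer correspond to the dancer's body.
    """
    offset, scale = bone_signature(bone_name)
    return [round(offset + scale * value, 4) for value in trajectory]

# Height (in meters) of a wrist joint sampled over five frames (made-up values)
wrist_y = [1.18, 1.22, 1.30, 1.30, 1.25]
print(transcode("wrist", wrist_y))
print(transcode("elbow", wrist_y))  # same motion, different bone, different numbers
```

A hash alone would scramble motion entirely; using it only to choose a fixed per-bone transform is one way to keep the gesture’s rhythm while hiding its source.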

Understanding the hash-per-bone process helps explain why the work feels simultaneously familiar and alien. Human movement remains recognizable but cannot be traced back to Catherine’s specific gestures, creating the unsettling sensation of witnessing a body that exists only in computational translation.

When deployed through browsers, Human Unreadable routes these movement coordinates through WebGL graphics processing pipelines, where custom GLSL shaders transform the hashed data into vertex positions and grayscale color values. The original dance gesture executes as parallel mathematical operations across hundreds of GPU cores simultaneously. Catherine’s physical motion becomes matrix multiplications in graphics memory, then electrical signals driving the pixels of a display panel, whether liquid crystals filtering a backlight or OLED subpixels emitting light directly, to produce the carefully calculated light perceived as abstract visual forms.
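A GLSL vertex shader typically multiplies each vertex position by one or more 4 × 4 matrices. The Python sketch below shows that arithmetic on the CPU for two made-up vertices; the matrices, values, and helper names are illustrative assumptions, not taken from Human Unreadable’s shaders.

```python
def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]

def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component (x, y, z, w) vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def uniform_scale(s):
    return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]]

# Scale first, then translate (matrices apply right to left)
model = mat_mul(translation(0.1, -0.2, 0.0), uniform_scale(2.0))

# A GPU would run this once per vertex, in parallel; here it is a plain loop
vertices = [[0.5, 0.25, 0.0, 1.0], [-0.5, 0.0, 0.0, 1.0]]
transformed = [mat_vec(model, v) for v in vertices]
print(transformed)
```

The fourth coordinate (w = 1) is what lets a single matrix encode translation as well as scaling.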

This computational architecture illustrates the relationship between digital palpability and the human body. Each transcoded layer (motion capture to coordinate data to mathematical hash to graphics processing to photon emission) preserves something essential about human movement while progressively abstracting it through material transformations. What emerges is dance reconstructed as an algorithmic process: what Operator terms “analog generative models,” which produce new choreographic sequences through mathematical recombination instead of playback, suggesting that computation might be another form of embodiment rather than an absence of one.

Casey REAS, Century

Traditional art writing might describe REAS’ Century as an “interactive, geometric abstraction” shaped by keyboard input. But this conceals how the work functions at the level of code and execution. Century opens as a circle filled with colored lines and translucent ellipses. Pressing “1” activates a deterministic slicing algorithm: segments shift and scatter across the circular frame in the same encoded sequence every time. Pressing “2” restores the original arrangement. Because the algorithm is deterministic, pressing “1” repeatedly produces exactly the same sequence of scattered arrangements. This computational predictability creates the satisfaction of control, while the mathematical precision of the scattering pattern gives the chaos an underlying sense of order.
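One common way to make a scatter that repeats exactly is to drive it from a pseudorandom generator reset to the same seed each time; p5.js exposes this through randomSeed(). Whether Century works this way is an assumption, and the function names below are hypothetical.

```python
import random

def scatter_slices(num_slices, seed, max_shift=40):
    """Return a (dx, dy) pixel offset for each slice from a seeded generator."""
    rng = random.Random(seed)  # private generator: same seed, same sequence
    return [(rng.randint(-max_shift, max_shift), rng.randint(-max_shift, max_shift))
            for _ in range(num_slices)]

def restore(num_slices):
    """The original arrangement: every slice back at zero offset."""
    return [(0, 0)] * num_slices

first_press = scatter_slices(8, seed=1)
second_press = scatter_slices(8, seed=1)
print(first_press == second_press)  # True: the "chaos" repeats exactly
print(restore(8)[0])                # (0, 0)
```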

REAS first created Century in Processing, which converts sketches into Java source and compiles them into bytecode that runs on the Java Virtual Machine, with just-in-time compilation, automatic memory management, and access to desktop OpenGL through its P2D and P3D renderers. For the web, he rewrote the piece in the open-source library p5.js, which runs in a browser’s JavaScript engine (for example, Chrome’s V8) and issues draw calls to WebGL or the HTML5 Canvas 2D API within the browser sandbox. That translation alters how the work uses processor instructions, allocates memory, and communicates with graphics hardware.

When Century draws, it often uses an off-screen buffer, a reserved block of memory where images assemble before appearing on screen. In p5.js, that buffer is a p5.Graphics object whose pixel data can be read into a Uint8ClampedArray, a typed array storing four 8-bit values per pixel (red, green, blue, and alpha). The slicing logic repositions regions of this buffer through coordinate transforms. When transforms compute non-integer positions, the browser’s rasterizer must resolve them onto the whole-pixel grid, by rounding or by antialiasing partial pixels, which can produce the thin seams visible between slices.
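The buffer layout described above can be sketched in Python with a bytearray standing in for JavaScript’s Uint8ClampedArray. The clamp_byte helper mirrors the typed array’s rule for finite numbers (clamp to the range 0 to 255, round ties to even), which Python’s built-in round() also follows; the helper names and the tiny 3 × 2 buffer are illustrative.

```python
def pixel_index(x, y, width):
    """Index of the red byte of pixel (x, y) in a row-major RGBA byte buffer."""
    return 4 * (y * width + x)

def clamp_byte(value):
    """Mimic assignment into a Uint8ClampedArray (finite values only):
    clamp to 0..255 and round ties to the nearest even integer."""
    return min(255, max(0, round(value)))

def set_pixel(buf, width, x, y, rgba):
    """Write four channel values (red, green, blue, alpha) for one pixel."""
    i = pixel_index(x, y, width)
    buf[i:i + 4] = bytes(clamp_byte(c) for c in rgba)

width, height = 3, 2
buf = bytearray(4 * width * height)      # 24 bytes, all zero: transparent black
set_pixel(buf, width, 2, 1, (300, -4, 127.5, 255))
print(list(buf[20:24]))  # [255, 0, 128, 255]
```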

Rendering proceeds at up to sixty frames per second in sync with the display’s refresh cycle. Each frame clears the buffer, recalculates geometry, and forwards updated pixel data through the browser’s rendering pipeline. Performance depends on CPU scheduling, garbage collection pauses, memory bandwidth availability, and the efficiency of the Canvas or WebGL subsystem. These technical constraints are active material forces.

The choice of p5.js determines which processor instructions execute, how memory is allocated and released, and how drawing commands queue for the graphics hardware. Century’s visible artifacts and timing behaviors emerge directly from these computational relationships, shaped by the artist’s decisions, demonstrating how born-digital art operates through specific material constraints.

Emily Xie, Memories of Qilin

Emily Xie evokes mythological creatures from a physics simulation in Memories of Qilin, where a computational method called Verlet integration (a way of calculating how particles move and interact over time) drives connected points toward balance while keeping them in constant, subtle motion. The work starts with position coordinates for nodes that push and pull on neighboring points through springs, creating mathematical relationships that seek stability without ever quite achieving it. These calculations run in p5.js, where Xie adjusts numerical parameters until particle clusters form and shift in patterns that feel organic without depicting anything specific. The spring force calculations explain why the textile patterns seem to stretch and contract like actual fabric. Knowing that mathematical tension drives the visual deformation makes the digital textures feel materially convincing rather than simply mapped onto abstract shapes.
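Verlet integration can be sketched in a few lines. The Python below moves one node attached by a spring to a fixed anchor; the spring constant, rest length, and time step are made-up values, not Xie’s parameters, and a real piece would connect many nodes to one another rather than to a single anchor. Because nothing damps the motion, the node keeps oscillating around the rest length instead of settling.

```python
def verlet_step(pos, prev, acc, dt):
    """Position Verlet: next = 2 * pos - prev + acc * dt**2, per coordinate."""
    return [2 * p - q + a * dt * dt for p, q, a in zip(pos, prev, acc)]

def spring_acc(p, anchor, rest, k):
    """Hooke's-law acceleration (unit mass) toward the spring's rest length."""
    dx, dy = p[0] - anchor[0], p[1] - anchor[1]
    dist = (dx * dx + dy * dy) ** 0.5
    stretch = dist - rest
    return [-k * stretch * dx / dist, -k * stretch * dy / dist]

anchor = (0.0, 0.0)
pos = [1.5, 0.0]   # node starts stretched past the rest length of 1.0
prev = list(pos)   # previous position equal to current: zero initial velocity
distances = []
for _ in range(200):
    acc = spring_acc(pos, anchor, rest=1.0, k=10.0)
    pos, prev = verlet_step(pos, prev, acc, dt=0.02), pos
    distances.append((pos[0] ** 2 + pos[1] ** 2) ** 0.5)

# With no damping, the node keeps swinging around the rest length
print(round(min(distances), 2), round(max(distances), 2))
```

Verlet integration stores the previous position instead of an explicit velocity, which keeps each update cheap and tends to stay stable for oscillating systems like springs.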

Xie layers scanned textures from her personal archive onto the evolving particle structures through coordinate transformation, a process that maps image patterns onto moving forms by translating their positions mathematically. Each placement follows computational rules while carrying visual associations from inherited traditions. The qilin, a mythological beast, is not depicted literally; instead it serves as an organizing principle for how forms appear and fade. In Chinese mythology, this creature materializes when the world becomes ready for change, embodying the space between recognition and mystery. The algorithm works similarly, generating moments of almost-familiarity that shift before they settle into fixed meaning.

The system mimics traditional textile work through computation. As the spring forces work through their directional tensions, node clusters stretch and contract in ways that suggest movement and growth. What appears as deep red or ink black comes from RGB (red, green, blue) color values calculated through algorithmic blending: the computer mixes colors mathematically rather than through pigment, yet produces a visual resonance similar to that of traditional palettes rooted in cultural memory.
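Algorithmic blending can be as simple as interpolating each channel between two colors. The Python sketch below uses illustrative color values, not Xie’s palette; in p5.js, lerpColor() performs a comparable interpolation.

```python
def blend(c1, c2, t):
    """Linear interpolation between two 8-bit RGB colors (t=0 gives c1, t=1 gives c2)."""
    return tuple(round(a + (b - a) * t) for a, b in zip(c1, c2))

deep_red = (140, 20, 30)    # illustrative values
ink_black = (20, 18, 24)
print(blend(deep_red, ink_black, 0.5))  # (80, 19, 27)
```

Interpolating the stored, gamma-encoded values directly, as here, is a common simplification; blending in linear light gives somewhat different midpoints.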

When browsers run the work, the JavaScript engine recomputes particle positions and force relationships, then passes drawing commands to the graphics processing unit, whose many specialized cores rasterize each frame in parallel. Each frame advances the particle system by another step of the same mathematical rules, storing no predetermined sequence, only computational potential that unfolds in real time. This process creates something that feels alive and unpredictable, qualities usually associated with traditional media, achieved instead through rigorous mathematical procedure.

Memories of Qilin uses controlled mathematical variability to prevent exact repetition while maintaining internal consistency. The physics simulation generates interpretive ambiguity through computational precision, indicating that algorithmic systems can produce the same sense of mystery that traditional artists achieve through deliberate suggestion. The images viewers encounter are artifacts of systems actively generating new possibilities rather than displaying predetermined images, showing how cultural memory might flow through computational emergence as effectively as through the traditional stories that first imagined creatures like the qilin.

These three works, Century, Human Unreadable, and Memories of Qilin, share a technological foundation that discloses the material conditions of born-digital art, even (and especially) where it is commonly assumed to be immaterial. Each was created through Art Blocks, a platform that deploys generative scripts to the Ethereum blockchain. When a collector mints one of these works, their interaction with the interface triggers a sequence of physical operations distributed across thousands of machines.

A pseudorandom seed is generated from block-level data, computed by validator hardware through billions of transistor-level switches embedded in silicon. These operations are carried out across a globally distributed network of computers that manage and transform electrical charge using processors, memory, and storage systems. In the case of Art Blocks, minting does not retrieve a finished image or stored file. It initiates the conditions under which the artwork comes into being. The script is written and deployed to the Ethereum blockchain in advance, but the output is resolved only when a collector initiates the transaction. At that moment, the network assigns a numerical “seed value” based on factors such as block height, timestamp, and sender address. This value is passed into the artwork’s codebase, which uses it to determine the work’s formal structure. Each output exists as the result of this event: an interaction between code, protocol, and machine-level computation.
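The following Python sketch illustrates the general pattern only: block-level fields are hashed into a seed, and the artwork’s code derives its structure from that seed. Art Blocks’ actual hash construction is not specified in this essay; the field choice follows the examples above, SHA-256 stands in for the Keccak-256 hash Ethereum contracts use, and token_hash, features_from_hash, and the palette and density features are hypothetical.

```python
import hashlib
import random

def token_hash(block_number, timestamp, sender, token_id):
    """Hypothetical: fold block-level fields into one 256-bit hex string.

    SHA-256 stands in here for the Keccak-256 hash Ethereum contracts use.
    """
    material = f"{block_number}:{timestamp}:{sender}:{token_id}".encode("utf-8")
    return "0x" + hashlib.sha256(material).hexdigest()

def features_from_hash(token_hex):
    """The artwork's script treats the hash as a seed and derives its form from it."""
    rng = random.Random(int(token_hex, 16))
    return {
        "palette_index": rng.randrange(8),
        "density": round(rng.uniform(0.2, 0.9), 3),
    }

sender = "0x0000000000000000000000000000000000000001"  # placeholder address
h = token_hash(18_000_000, 1_693_000_000, sender, token_id=42)
print(h)
print(features_from_hash(h))
```

The same hash always yields the same features, which is why the stored seed, rather than an image file, is enough to reproduce the output.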

And the Ethereum network itself does not persist in the abstract. It is sustained by machines: validator clients running execution and consensus software, solid-state drives storing historical chain data, and operators tasked with ensuring uptime and version compatibility. Persistent storage of each artist’s code depends on an ongoing choreography of software updates, hardware maintenance, power availability, and network reliability.

To describe these works accurately is to speak about entropy generation, instruction execution, memory access, and real-time rendering. They are mechanisms through which the artwork exists, not technical footnotes.

William Mapan, Distance

William Mapan traces how visual information travels between different material forms in Distance, showing that the same image can exist as computational math, paint chemistry, and optical data while maintaining visual continuity despite material transformation. His practice involves creating compositions through JavaScript algorithms, translating them to gouache paintings, then converting painted textures back into algorithmic parameters, a process that reveals how information persists through radically different material states.

When Mapan generates colors using HSV calculations in JavaScript (mathematical operations that organize numerical values to specify hue, saturation, and value, or brightness), he then recreates these colors by measuring and mixing gouache using only primary colors. Both processes require precise material manipulation: his code arranges numerical data to define color relationships, while his paint mixing combines cadmium red, ultramarine blue, and cadmium yellow in exact proportions to achieve closely matching visual results. In the gouache, pigment particles suspended in a gum arabic binder selectively absorb and scatter light at specific wavelengths, while his algorithms store numerical values that a screen converts into light from red, green, and blue subpixels. These are two different material routes to the same perceived color.
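Mapan’s code is in JavaScript and is not reproduced here; as a hedged illustration, Python’s standard colorsys module performs the same kind of HSV-to-RGB conversion. The example colors are arbitrary. The resulting three numbers are what a screen turns into light, whereas a gouache mixture has to reach a similar appearance through pigment absorption.

```python
import colorsys

def hsv_to_rgb8(hue_deg, saturation, value):
    """Convert hue in degrees and saturation/value in 0..1 to 8-bit RGB."""
    r, g, b = colorsys.hsv_to_rgb((hue_deg % 360) / 360.0, saturation, value)
    return tuple(round(255 * c) for c in (r, g, b))

print(hsv_to_rgb8(0, 1.0, 1.0))      # (255, 0, 0): fully saturated red
print(hsv_to_rgb8(210, 0.6, 0.8))    # a muted blue
```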

Notable insights emerge when Mapan photographs these painted surfaces and feeds the captured textures back into new algorithmic parameters. Digital camera sensors convert the light scattered by dried paint into electrical charge, transforming one material arrangement back into another while preserving visual characteristics that allow the cycle to continue. This feedback loop makes Distance a demonstration that information can move fluidly between material arrangements (mathematical, chemical, and optical), each maintaining enough structural integrity to inform the next transformation. This material cycle may illuminate why the algorithmic and painted elements feel visually continuous rather than like a digital simulation of traditional media. The translation process preserves enough visual information that the boundaries between computation and tangible pigment become genuinely unclear.

Each painted patch represents information that has traveled through multiple material states: mathematical calculations stored as electrical charges, then pigment molecules arranged by brush movement, then photons scattered by paint texture, then electrons generated in camera sensors, finally becoming compressed data patterns written to digital storage. Understanding this material journey reveals how Distance demonstrates that digital and traditional media share the fundamental capacity to carry and transform information through matter, suggesting that our appreciation of computational art depends on recognizing these material continuities rather than treating digital processes as somehow separate from physical reality.

LoVid, Hugs on Tape

For Hugs on Tape, LoVid processes personal recordings of embraces collected during the COVID-19 pandemic through a system that translates digital image sequences into analog signal interference. Videos recorded on smartphones are decoded into continuous electrical voltages, in which each pixel’s luminance sets the waveform’s voltage at that instant, so brightness changes along each scanned line become the signal’s frequency content. These signals are routed into custom-built video synthesizers composed of analog circuits that modulate incoming voltages in real time. The synthesizers introduce chromatic complexity through waveform interference, feedback routing, and phase offsetting, altering the underlying signal before any frame-based encoding recurs.
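LoVid’s synthesizers are analog circuits, so any code can only be a numerical analogy. This Python sketch, under stated assumptions, reads one line of pixels as voltages using the Rec. 601 luma weights, then adds a phase-offset oscillator to suggest how interference reshapes that signal. The 0.3 V black and 1.0 V white levels are a simplified assumption loosely modeled on composite video, and the helper names are hypothetical.

```python
import math

def luma(r, g, b):
    """Rec. 601 luma: perceived brightness from 8-bit R, G, B."""
    return 0.299 * r + 0.587 * g + 0.114 * b

def luma_to_voltage(y, black=0.3, white=1.0):
    """Map 0..255 luma onto a simplified video voltage range (assumed levels)."""
    return black + (white - black) * (y / 255.0)

def scanline(pixels):
    """One line of the image, read left to right, as a sequence of voltages."""
    return [luma_to_voltage(luma(*p)) for p in pixels]

def interfere(signal, cycles, phase, depth):
    """Add a phase-offset oscillator to the signal, loosely like a synth mixer."""
    n = len(signal)
    return [v + depth * math.sin(2 * math.pi * cycles * i / n + phase)
            for i, v in enumerate(signal)]

# A dark-to-bright edge, like the outline of a body against a lit wall
line = scanline([(10, 10, 10)] * 4 + [(220, 200, 180)] * 4)
modulated = interfere(line, cycles=2, phase=math.pi / 3, depth=0.05)
print([round(v, 3) for v in line])
print([round(v, 3) for v in modulated])
```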

The visual result is shaped by the structure of the body in the original footage. The electrical properties of the image (brightness gradients, edge intensities, and spatial distributions) condition how the synthesizer circuits alter the signal. The presence of the body acts as a modulator, defining where interference patterns condense or disperse. Color is produced through voltage-based modulation, not applied after the fact. The artists’ hands move across the synthesizer, patching cables, turning knobs, and adjusting voltages in flowing motions: a dance between hand and machine. The image responds to each gesture, shifting and bending with every turn and every deliberate touch. The body is indexed by density and movement rather than outline or contour.

After analog processing, the signal is re-digitized through video capture hardware and imported into editing software. In Adobe Premiere and After Effects, LoVid applies operations such as tiling, mirroring, and temporal repetition. These manipulations recompose the already-transformed footage, extending analog interference into new spatial arrangements. The artists painstakingly draw the silhouettes of each body by hand in Photoshop, creating the frame-by-frame stop-motion animation. These animated elements are then composited with the video patterns to create the final work. Each layer of the process preserves material constraints introduced by the previous one. The editing environment does not overwrite the analog signature but recombines it through digital array manipulation.

The work demonstrates how signal passes through computational and electrical systems without being abstracted from material conditions. LoVid’s video synthesizers operate as voltage processors that introduce form through circuit behavior rather than software logic. The final image accumulates its properties through sequential transformations, each of which imposes its own limitations and affordances. LoVid’s system traces the pathway of an image across incompatible signal domains, allowing each format transition to leave a visible imprint on the result.

0xDEAFBEEF, Glitchbox

0xDEAFBEEF’s Glitchbox reveals the material conditions of its own production: processor time, memory layout, and system throughput. Written deliberately by hand in C and compiled into WebAssembly, the program runs as a compact executable that carries no assets and relies on no external libraries. All audio and imagery are generated at runtime.

Sound is produced one sample at a time through arithmetic operations that calculate pitch, amplitude, and modulation shape. Each value is computed in the moment and passed directly to the output buffer. The sound is not retrieved, stored, or replayed. It exists only while being processed.
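Glitchbox is written in C; the Python below is only a sketch of per-sample synthesis in general, with an assumed sample rate, pitch, and tremolo rate, and a hypothetical render_audio helper. Each call overwrites the output buffer with values computed on the spot, so nothing is stored or replayed.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (an assumed rate)

def render_audio(buffer, start, pitch_hz, mod_hz, amp):
    """Fill `buffer` with freshly computed samples; nothing is read from storage.

    Returns the index of the next sample so the following call can continue.
    """
    for n in range(len(buffer)):
        t = (start + n) / SAMPLE_RATE
        # Tremolo envelope between 0 and 1, shaping amplitude over time
        envelope = 0.5 * (1.0 + math.sin(2 * math.pi * mod_hz * t))
        buffer[n] = amp * envelope * math.sin(2 * math.pi * pitch_hz * t)
    return start + len(buffer)

out = [0.0] * 256                  # the output buffer the audio device would read
next_start = render_audio(out, 0, pitch_hz=220.0, mod_hz=3.0, amp=0.8)
# The next block overwrites the same buffer with newly computed values
next_start = render_audio(out, next_start, pitch_hz=220.0, mod_hz=3.0, amp=0.8)
```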

The image is rendered from a 192px grid. Each pixel holds a single 8-bit grayscale value. Excluding color channels reduces the size of the memory buffer, allowing the frame to be updated quickly enough to remain synchronized with the audio. The low resolution matches what can be recomputed and passed to the display at the necessary rate. Each redraw erases the previous frame and replaces it with a new one, constructed entirely from recalculated values.
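The memory savings of a single grayscale channel are simple arithmetic. The sketch assumes, for illustration, a square 192 × 192 grid, a 60 Hz redraw, and 44.1 kHz audio; the essay specifies only the 192-pixel grid and the 8-bit grayscale format.

```python
def buffer_bytes(width, height, channels, bytes_per_channel=1):
    """Size of an uncompressed frame buffer in bytes."""
    return width * height * channels * bytes_per_channel

W, H = 192, 192                 # assumed square grid, for illustration only
FPS, SAMPLE_RATE = 60, 44_100   # assumed frame rate and audio sample rate

gray = buffer_bytes(W, H, 1)     # one 8-bit value per pixel
rgba = buffer_bytes(W, H, 4)     # four 8-bit values per pixel
print(gray, rgba, rgba // gray)  # 36864 147456 4
print(gray * FPS)                # bytes of grayscale image data per second
print(SAMPLE_RATE // FPS)        # 735 audio samples to compute per frame
```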

The system is closed and recursive. Each cycle executes the same operations on a new set of inputs. There is no media archive. No part of the output is retained beyond its frame or sample. The viewer experiences the system sustaining itself through continuous computation under constraint. The flicker rate, waveform character, and overall form of the piece reflect limits imposed by available memory, processor speed, and the timing of execution. This real-time generation explains why Glitchbox feels immediate and present rather than like a recording: knowing that each frame is calculated in the moment makes the flicker feel like witnessing computation thinking.

Parameters exposed to the viewer modify internal variables. Each change produces a new output using the same logic. When saved, only the parameters are recorded. The version is regenerated by re-executing the program with those values.
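Saving only parameters works because the program is deterministic. In this hypothetical Python sketch, render and its seed, size, and shift parameters are illustrative, not 0xDEAFBEEF’s code; a few bytes of JSON are enough to regenerate the full frame.

```python
import json
import random

def render(params):
    """Hypothetical renderer whose output depends only on its parameters."""
    rng = random.Random(params["seed"])
    size = params["width"] * params["height"]
    # Each 8-bit value is shifted right to reduce the number of gray levels
    return bytes(rng.randrange(256) >> params["shift"] for _ in range(size))

params = {"seed": 7, "width": 16, "height": 16, "shift": 2}
saved = json.dumps(params)           # the only thing written to storage
frame_a = render(params)
frame_b = render(json.loads(saved))  # regenerate by re-running the program
print(len(saved), len(frame_a), frame_a == frame_b)
```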

The form of Glitchbox emerges from timing loops, data structures, and allocation limits. Each decision, such as pixel format, audio sample rate, or redraw frequency, reflects a very physical tradeoff between complexity and speed. The image shows what can be drawn fast enough. The sound shows what can be computed without delay. Every element that appears is the result of conscious constraints being met.

Zach Lieberman, Color Blinds Study

In Lieberman’s Color Blinds Study, color pulses through a scaffolding of stripes that stretch across the screen, folding and expanding like blinds. At one moment, the palette hovers in dusty lilacs and peach-grays, striated into clean horizontal bands. Seconds later, the bands have thickened and deformed, and blotches of blue, yellow, and red bloom within them, held in suspension by soft curvature. Then, as if responding to an unseen pressure, the entire composition tilts its chromatic axis, fading toward night tones and spectral noise.

Lieberman authored the work in openFrameworks, a C++ toolkit that provides direct access to OpenGL graphics processing. The motion emerges from time-based parameter calculations that modify stripe positions, color values, and geometric transforms across rendering cycles. Each frame recalculates the entire composition from mathematical functions rather than playing back stored animation data. The system generates color through algorithmic blending operations and applies procedural noise to create textural variation within precise geometric constraints.

The work runs as compiled executable code that sends drawing commands, through the graphics driver, to the graphics processing unit. Vector calculations determine stripe positioning, while fragment shaders handle color interpolation and blending operations. The continuous visual changes result from elapsed clock time driving mathematical functions that modulate amplitude, frequency, and phase. No predetermined sequence exists. Each frame represents the current state of ongoing calculations.
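Lieberman’s source is not reproduced in the essay, so the following Python is only a sketch of the general technique: a time value drives sine functions whose amplitude, frequency, and per-stripe phase set each stripe’s position. All parameter values and the stripe_offsets name are hypothetical; in openFrameworks, ofGetElapsedTimef() supplies a comparable elapsed time in seconds.

```python
import math

def stripe_offsets(t, count, amplitude=12.0, frequency=0.4, phase_step=0.6):
    """Vertical offset in pixels of each stripe at elapsed time t (seconds).

    Amplitude, frequency, and a per-stripe phase step shape the motion.
    """
    return [amplitude * math.sin(2 * math.pi * frequency * t + i * phase_step)
            for i in range(count)]

# Each frame samples the functions at the current time; nothing is stored
for frame in range(3):
    t = frame / 60.0
    print([round(o, 2) for o in stripe_offsets(t, count=5)])
```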

When the work is encoded as H.264 video, the computational process becomes encoded video data. Real-time mathematical generation becomes fixed pixel arrays stored as compressed blocks, many of them predicted from neighboring frames. The frame rate locks to the encoding specification rather than to computational timing. Color values shift from live calculation to decoded approximations, typically with color information stored at reduced resolution and marked by compression artifacts.
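One reason decoded color is only an approximation is chroma subsampling: most H.264 video stores color information at reduced resolution, commonly in the 4:2:0 arrangement, where one color value covers each 2 × 2 block of pixels. The Python sketch uses simple averaging as the downsampling filter, which is an assumption; real encoders may filter differently, and the sample values are made up.

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (assumes even width and height)."""
    return [[(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
             for x in range(0, len(plane[0]), 2)]
            for y in range(0, len(plane), 2)]

def upsample(sub):
    """Decoder side: spread each stored value back over its 2x2 block."""
    out = []
    for row in sub:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

# A sharp chroma edge that falls inside a 2x2 block
blue_chroma = [[50, 200, 200, 200],
               [50, 200, 200, 200]]
print(upsample(subsample_420(blue_chroma)))
# [[125.0, 125.0, 200.0, 200.0], [125.0, 125.0, 200.0, 200.0]]
```

The edge that sat between the first and second pixels is smeared across the whole block after decoding, a small instance of color becoming an approximation.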

The images captured from the piece betray its behavior. No single frame suffices. Each still freezes one phase of a gradient that only makes sense in transit: a muted band with a sudden peak of blue at its center, a smear of red sliding toward brown, or a ring of peach brightening into lemon before sinking again. The work lives in their succession and in the specificity of digital chrominance.

Color Blinds Study demonstrates how openFrameworks’ direct hardware access enables real-time geometric recalculation that produces the work’s characteristic temporal variability. The stripe-based visual system depends on floating-point arithmetic units continuously computing positional offsets and color interpolations; because those values depend on the moment at which each frame is computed, the chromatic transitions are not stored in advance and are unlikely to repeat exactly. When the work is converted from live computation to encoded video, this variability freezes into fixed pixel sequences, revealing how the same visual forms can emerge from fundamentally different material processes: real-time calculation versus data playback. The work’s aesthetic depends on this computational timing, showing how C++ execution speed and graphics pipeline efficiency directly shape the rhythm and intensity of the color changes that define the viewing experience.

Conclusion

These examples reveal that computational art operates through the same material reality as any work in the Toledo Museum of Art’s collection. A new material epistemology for computation must be considered. Digital artworks are not immaterial. They are produced through voltage shifts, instruction execution, memory allocation, and signal modulation across physical substrates. Computation takes place in matter. Its outputs are structured by processor behavior, timing architectures, and display technologies that operate within defined physical constraints.

Critical engagement with digital art requires a shared taxonomy built around these systems. Rendering logic, buffer states, procedural structures, and data formats shape what becomes visible or audible. They constitute the material basis of the work. Adopting this approach will require new education models for curators, critics, and journalists alike, along with a spirit of collaboration between art historians and technologists.

To write ekphrastically about art with digital components is to describe these systems with precision. It means treating computation as a physical process and recognizing code, hardware, and signal as materials. Formal analysis must account for how the work comes into being through execution, timing, and transformation within machine environments.

Building this vocabulary allows media artworks to be discussed on terms equal to those of traditional art forms. The surface appearance of a computational work reflects physical systems operating in real time. Describing those systems is central to meaningful arts writing.

Floating-point numbers are used to store approximate values of real numbers, including those that are not integers, such as pi (3.14159…), where the decimal point “floats”; that is, it is not always in the same position.

The process of reducing the number of data points within a set, usually for storage or processing purposes.

A one-way mathematical function that converts data of any size into a fixed-length string of characters, often shorter than the input. Because the original data cannot practically be recovered from the hash, hashing is used to keep sensitive data, such as account passwords, secure.

A programming language for writing shaders, small programs that run on the GPU and determine the appropriate levels of light, darkness, and color during the rendering of a scene.

A command sent from the CPU (central processing unit) that tells the GPU (graphics processing unit) what to draw and how to draw it.

In programming, a fundamental data structure that, in many languages, contains elements of the same type. Each element can be accessed using an index, which corresponds to its place within the array order. Indices start with 0 and ascend numerically. For example, the array areaCodes = [216, 419, 614] holds the area codes, in order, for Cleveland (index 0), Toledo (index 1), and Columbus (index 2), and these values are all integers. To return Toledo’s area code of 419, a programmer would run a command similar to: print(areaCodes[1]).

The conversion of the coordinates of a point or set of points from one coordinate system to another. Coordinate transforms allow programmers and computer engineers to switch between different ways of describing position, so that machines can understand and work with objects in different spaces.

A process built into some programming languages that automatically frees up memory by removing objects no longer in use by the program.

When simple elements interact to create complex behaviors or patterns that could not be predicted from the individual components alone. It’s how ant colonies organize without a leader, how consciousness arises from neurons, or how water molecules create waves: Something new emerges from simpler parts interacting.

A digital ledger that stores information across many computers instead of one central location. Each new record links to previous ones, forming a secure chain of “blocks” (packages of transaction data linked by cryptographic hashes) that is extremely difficult to alter or hack. Blockchain technology has been used to create cryptocurrencies and non-fungible tokens (NFTs) because it can record transactions securely without relying on a centralized authority.

The process of creating and registering a new digital asset, such as an NFT (non-fungible token) or cryptocurrency token, on a blockchain network. It transforms digital content into a verified, tradeable item with recorded ownership, similar to how traditional mints produce physical coins or currency.

A number (or vector) that initializes a pseudorandom number generator, an algorithm for generating a sequence of numbers that approximates the properties of a random number sequence. The sequence generated is not truly random, because it is determined by the initial value of the seed.

The process of subtracting the value of one waveform from the value of another, which manipulates the underlying signal created from decoding smartphone videos, for instance.

A measure of how many units of information can be processed over a communication network within a given time frame.

A temporary storage location for outgoing data.

A function or series of functions used in computer graphics to generate a specific image texture.

Visible or audible distortions or flaws within an image, audio, or video file. Lossy compression, which discards data to reduce file size, can introduce these artifacts.